From 2 Days to 1 Hour: The AI Revolution That Could Transform Game Animation

Inside Uthana's mission to beat the visual Turing test for human motion—and what it means for game development

What if you could animate game characters just by typing a text prompt or recording a quick video? In this fascinating conversation with Viren Tellis, CEO of Uthana, we explore how AI is revolutionizing game animation and making the impossible possible for indie developers.

Key takeaways from this episode:

50-80% time savings on specific animation tasks such as prototyping and style transfer, with one task cut from about 2 days to about 1 hour

Democratizing game development by enabling teams without animators to create moving characters

The future is real-time: AI-powered NPCs that respond and move dynamically during gameplay

Three ways to create animation on Uthana: text-to-motion, video-to-motion, and a searchable library of 20,000+ motions, each solving different production challenges

Check it out!

Top 5 Gaming News

Krafton Slams Ex-Subnautica Execs (The Verge): Krafton accused fired Unknown Worlds executives of abandoning Subnautica 2 development while keeping 90% of a $250 million bonus, prompting co-founder Charlie Cleveland to file a lawsuit denying the claims and asserting the game was ready for release.

Microsoft Layoffs Threaten Game Pass Future (GameRant): Microsoft's recent layoffs have devastated Xbox Game Pass's future pipeline. The cuts across major Xbox developers including Bethesda, Halo Studios, and Call of Duty studios undermine consumer confidence in Xbox Game Pass just as Microsoft needs a steady stream of new releases to compete with services like Netflix.

Gaming Ad Revenue Lags Behind Attention (Axios): Despite projected growth to $8.6B in U.S. gaming ad spend this year, gaming still under-monetizes relative to its massive share of audience attention, prompting companies like Twitch, as well as brands, to rethink how they reach gamers.

Polygon on Microsoft’s Studio Mismanagement (Polygon): An analysis piece critiques Microsoft's pattern of acquisition mismanagement—pointing to recent cancellations and uncertainty at studios like Rare and The Initiative.

Mecha Break’s Rocky But Successful Launch (TechNode): Giant robot battle game Mecha Break launched globally and peaked at over 130K concurrent players on Steam within 2 days, but it has drawn significant criticism from players over its gameplay and various technical issues.

Top 3 AI x Gaming News

Discord Pilots AI Chat Summaries for Gaming Communities (The Verge): Discord is testing AI-powered summaries of chat threads to improve information flow in active gaming servers, a move poised to boost engagement and moderation efficiency.

Former Rockstar Dev Claims AI Will Keep GTA 6 as Most Expensive Game (Beebom): A former Rockstar developer argues that AI will bring down development costs for future titles, so GTA 6 may remain more expensive than successors such as GTA 7. The claim has sparked debate about AI's economic impact on AAA game development.

Video Game Actors Strike Ends with AI Protections (BBC): Performers reached a deal with 10 major game companies on July 10, 2025, ending an 11-month strike focused on AI usage in voice and motion capture. The agreement includes safeguards for actors' likenesses and compensation when AI replicates their work. This resolves key labor concerns but highlights ongoing tensions in AI's role in game development.

Supercell’s mo.co is now global and no longer requires invites, hence the huge spike in downloads.

To sustain that momentum, Supercell will need to deliver on its liveops.

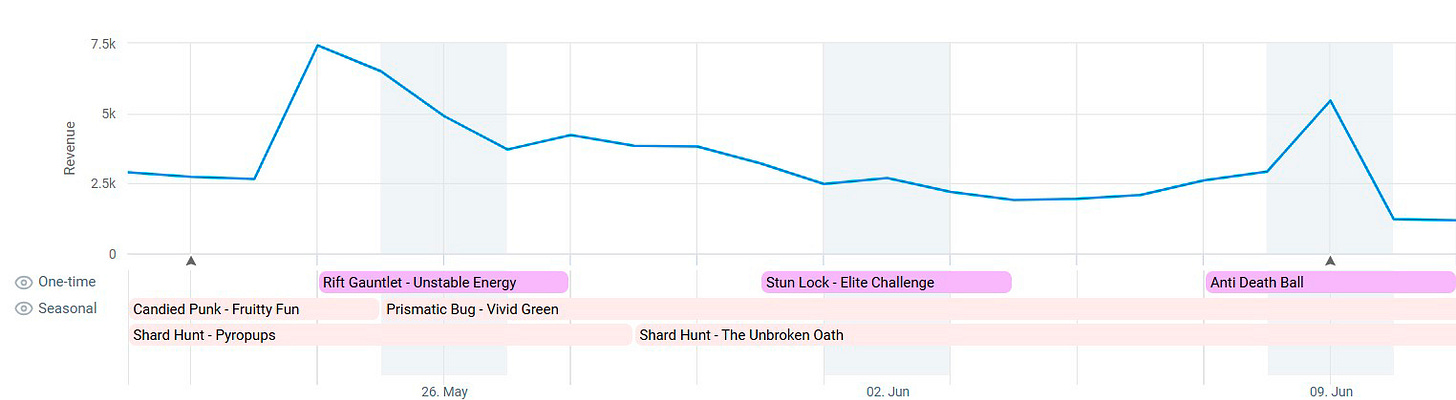

So how do Squad Busters' liveops (see below) compare with mo.co's liveops calendar and events?

Squad Busters LiveOps Calendar & Events:

Mo.co LiveOps Calendar & Events:

Check out the full deconstruction from AppMagic.

🎧 Listen on Spotify, Apple Podcasts, or Anchor

Speakers:

Joseph Kim, CEO at Lila Games

Tarun R., Product Manager at Lila Games

Viren Tellis, CEO and Co-founder at Uthana

AI Animation in Games: Early Days of a Promising Technology

I recently had the opportunity to sit down with Viren Tellis, CEO of Uthana, and Tarun R., Product Manager at Lila Games, to discuss the current state and future potential of AI animation in game development. What emerged was a nuanced picture of a technology that's making real progress, even as it navigates the inevitable challenges of early adoption.

Their insights offer a valuable window into where AI animation tools stand today—and perhaps more importantly, where they're headed as the technology matures.

The Efficiency Story: Early but Encouraging

Uthana reports 50-80% time savings on specific animation tasks. While these numbers apply to particular scenarios rather than entire pipelines, they point to meaningful progress:

Where AI animation shines today:

Rapid prototyping and previsualization

Automated retargeting between different rigs

Quick access to a library of 20,000+ searchable animations

Initial motion concepts that accelerate the creative process

Areas still maturing:

Production-ready final animations

Complex stylized movements

Character-specific personality nuances

The refined details that define AAA quality

Tarun's team was already deep in production, with V1 animations completed and the focus on final stylization tweaks: exactly the kind of refined work that still benefits from human expertise. As he noted, "animators prefer to handle those small tweaks manually." That reflects the technology's current state; as AI models improve and learn from more diverse data, these systems will likely take on increasingly sophisticated animation challenges.
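The gap between task-level and pipeline-level savings is simple arithmetic. The sketch below is a back-of-the-envelope model, not Uthana's methodology: the function names are ours, and the 20% pipeline share is a purely hypothetical figure. The 7-hour and 30-hour figures come from Viren's video-to-motion example in the transcript.

```python
def fraction_saved(hours_saved: float, hours_remaining: float) -> float:
    """Share of total effort removed when a tool eliminates part of a task."""
    total = hours_saved + hours_remaining
    if total <= 0:
        raise ValueError("total hours must be positive")
    return hours_saved / total


def pipeline_savings(task_share: float, task_savings: float) -> float:
    """Overall savings when only one slice of the pipeline gets faster.

    task_share: fraction of total animation effort spent on the accelerated task.
    task_savings: fraction of that task's time the tool removes (e.g. 0.5 to 0.8).
    """
    if not (0 <= task_share <= 1 and 0 <= task_savings <= 1):
        raise ValueError("both arguments must be fractions in [0, 1]")
    return task_share * task_savings


# Viren's example: video-to-motion removes ~7 hours, ~30 hours of work remain.
print(f"{fraction_saved(7, 30):.0%}")  # 19%

# Hypothetical: prototyping is 20% of the effort and gets 50-80% faster.
for s in (0.5, 0.8):
    print(f"{pipeline_savings(0.2, s):.0%}")  # 10%, then 16%
```

A large speedup on one slice of the work becomes a much smaller overall gain, which is why the 50-80% figure is best read per task rather than per pipeline.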

The Interface Challenge: A Solvable Problem

Tarun's hands-on experience revealed an interesting challenge with text-to-motion interfaces. When trying to generate a complex sequence—a character tactically entering a room with a weapon—he found it difficult to articulate the specifics through text alone.

As Tarun put it: "I had a lot of friction getting into that level of specificity because I couldn't imagine it at first." This helps explain why video-to-motion currently sees more practical use for complex animations.

But this feels like a tractable problem. As Viren noted, the models are evolving rapidly: "I encourage anyone that tried Uthana six months ago to try it again today. If you're still not happy, try it again in another six months." The trajectory suggests that better interfaces and more sophisticated understanding of natural language animation descriptions are on the horizon.

The Data Challenge: Building the Foundation

One of the most interesting revelations from our conversation was the training data challenge. Unlike image AI that can leverage "essentially every image on the internet," animation AI faces a more constrained data environment. Motion capture data is expensive to produce and often proprietary.

Viren's approach—partnering with studios for custom models—shows pragmatic thinking about how to bootstrap better data. As more studios see value in the technology, we'll likely see a virtuous cycle: better data leads to better models, which attracts more users, who contribute more data.

This isn't an insurmountable obstacle—it's a classic marketplace problem that successful platforms have solved before.

Two Markets, Two Opportunities

The current adoption patterns reveal distinct value propositions emerging:

Indie developers are discovering new possibilities:

Creating animations without traditional animation expertise

Rapidly prototyping ideas that would have taken days

Competing visually at a level previously out of reach

Focusing resources on gameplay innovation

AA studios are exploring efficiency gains:

Accelerating the ideation process

Expanding the variety of character animations

Reducing—though not eliminating—animator workload

Testing "what if" scenarios quickly

The absence of widespread AAA adoption isn't surprising for early-stage technology. But the enthusiasm from indie and AA developers suggests a bottom-up adoption pattern that often precedes broader industry transformation.

The Business Model: Designed for Experimentation

Uthana's "unlimited creation, pay only for downloads" model struck me as particularly thoughtful. By removing friction from experimentation, they're encouraging the kind of iterative discovery that helps creators find unexpected solutions.

Current offering:

Free tier: Up to 20 seconds of downloads monthly

Paid tier: Custom pricing for studios

Philosophy: Don't penalize creative exploration

This approach recognizes that animation is inherently iterative. As the technology improves, the hit rate will increase, but the model already supports the creative process as it actually works.
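A minimal sketch of how an "unlimited creation, pay on outcomes" quota could be tracked, assuming the free-tier numbers from the interview (20 downloaded seconds per month). The `DownloadQuota` class and its methods are hypothetical illustrations, not Uthana's API; the point is that generating and previewing never consume the allowance, only downloads do.

```python
from dataclasses import dataclass

FREE_SECONDS_PER_MONTH = 20.0  # free-tier allowance described in the interview


@dataclass
class DownloadQuota:
    """Hypothetical tracker: creation is free, downloaded seconds are metered."""
    allowance: float = FREE_SECONDS_PER_MONTH
    used: float = 0.0
    generations: int = 0

    def generate(self) -> None:
        # Creation is unlimited: count prompts for analytics, never block them.
        self.generations += 1

    def remaining(self) -> float:
        return max(self.allowance - self.used, 0.0)

    def download(self, clip_seconds: float) -> bool:
        """Charge a download against the allowance; False if it does not fit."""
        if clip_seconds <= 0:
            raise ValueError("clip length must be positive")
        if clip_seconds > self.remaining():
            return False  # beyond the free tier: would need a paid plan
        self.used += clip_seconds
        return True

    def reset_month(self) -> None:
        self.used = 0.0


quota = DownloadQuota()
for _ in range(50):
    quota.generate()          # 50 prompts, nothing charged
print(quota.download(4.0))    # True: a four-second locomotion clip
print(quota.download(12.0))   # True
print(quota.download(5.0))    # False: only 4 seconds left
print(quota.remaining())      # 4.0
```

Metering only the outcome keeps the cost of a bad generation at zero, which matches the stated goal of not penalizing exploration.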

Technical Progress and Potential

While some capabilities are still in development, the roadmap Viren outlined is ambitious and achievable:

Available now:

Text-to-motion generation in about 5 seconds

Video-to-motion in 2-3 minutes

Motion stitching for complex sequences

Automatic retargeting across different rigs

Coming soon:

Near-real-time locomotion generation

Style transfer to match existing character aesthetics

More sophisticated motion editing tools

Integration with standard animation pipelines

The vision of real-time, context-responsive character animation is particularly compelling. Imagine NPCs whose movements adapt dynamically to player actions, or characters whose animation style shifts with narrative tone. We're not there yet, but the path is becoming clearer.
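Part of what makes automatic retargeting hard is that rigs disagree on bone counts; as Viren notes in the transcript, a typical Mixamo skeleton has three spine bones while an Unreal skeleton might have five. The sketch below shows one deliberately simplified way to carry a spine bend across chains of different lengths: spread each source bone's rotation along its length, then resample that cumulative bend at the target bones. It assumes every bone rotates about a single shared axis (for example, a pure forward bend), so angles can simply be added; real retargeting works with full 3D rotations, and Uthana has not said how its system does this. The function names are hypothetical.

```python
from itertools import accumulate
from typing import List


def _breakpoints(lengths: List[float]) -> List[float]:
    """Normalized positions (0..1) of each joint along a bone chain."""
    if not lengths or any(l <= 0 for l in lengths):
        raise ValueError("bone lengths must be positive")
    total = sum(lengths)
    return [0.0] + [c / total for c in accumulate(lengths)]


def _interp(x: float, xs: List[float], ys: List[float]) -> float:
    """Piecewise-linear interpolation; xs must be strictly increasing."""
    for i in range(len(xs) - 1):
        if xs[i] <= x <= xs[i + 1]:
            t = (x - xs[i]) / (xs[i + 1] - xs[i])
            return ys[i] + t * (ys[i + 1] - ys[i])
    raise ValueError("x outside the chain")


def redistribute_chain(src_angles: List[float], src_lengths: List[float],
                       dst_lengths: List[float]) -> List[float]:
    """Map single-axis bone angles from one chain onto a chain of another length.

    Each source rotation is treated as spread evenly over its bone, giving a
    cumulative bend curve; each target bone takes the bend accumulated over
    its own span. The total bend is preserved.
    """
    if len(src_angles) != len(src_lengths):
        raise ValueError("one angle per source bone")
    xs = _breakpoints(src_lengths)
    ys = [0.0] + list(accumulate(src_angles))  # cumulative bend at each joint
    cum = [_interp(q, xs, ys) for q in _breakpoints(dst_lengths)]
    return [b - a for a, b in zip(cum, cum[1:])]


# 3-bone spine bending more toward the top, mapped onto a 5-bone spine.
angles = redistribute_chain([5.0, 10.0, 15.0], [1.0] * 3, [1.0] * 5)
print([round(a, 3) for a in angles])  # [3.0, 4.0, 6.0, 8.0, 9.0]
```

The 30 degrees of total bend survive the mapping, and the upper spine still carries most of it; the manual alternative is the per-bone mapping step Tarun describes doing in MotionBuilder.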

Motion Stitching: A Clever Innovation

One feature that particularly caught my attention was motion stitching. Rather than trying to generate complex sequences in one go, Uthana lets animators combine shorter clips with AI-managed transitions. As Viren explained, you can "find motion A, find motion B, decide on the timing between them... and hit stitch. Ten seconds later, you have a coherent motion."

This pragmatic approach works with AI's current strengths while providing immediate value for creating longer sequences.
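Uthana's stitching uses AI-managed transitions, and the interview does not describe how they work. For readers new to the idea, the sketch below shows a much simpler classical baseline: generate in-between frames that ease from the last pose of motion A to the first pose of motion B over a chosen gap, clamped to the 0.1 to 3 second range Viren mentions. The 30 fps frame rate, the one-value-per-joint pose format, and the `stitch` function are assumptions for illustration; production systems blend full 3D joint rotations and also align root position and facing.

```python
from typing import List

Pose = List[float]  # one value per joint channel (assumed format)
FPS = 30  # assumed frame rate
MIN_GAP_S, MAX_GAP_S = 0.1, 3.0  # transition range mentioned in the interview


def stitch(clip_a: List[Pose], clip_b: List[Pose], gap_s: float,
           fps: int = FPS) -> List[Pose]:
    """Join two clips with eased in-between frames (a naive, non-AI baseline)."""
    if not clip_a or not clip_b:
        raise ValueError("both clips need at least one frame")
    gap_s = min(max(gap_s, MIN_GAP_S), MAX_GAP_S)  # clamp to the supported range
    n = max(1, round(gap_s * fps))  # number of transition frames
    start, end = clip_a[-1], clip_b[0]
    if len(start) != len(end):
        raise ValueError("clips must share the same joint layout")
    bridge = []
    for i in range(1, n + 1):
        t = i / (n + 1)  # strictly between 0 and 1
        t = t * t * (3 - 2 * t)  # smoothstep: ease out of A, ease into B
        bridge.append([s + t * (e - s) for s, e in zip(start, end)])
    return clip_a + bridge + clip_b


# Motion A holds one pose for 1 s; motion B holds another pose for 1 s.
motion_a = [[0.0, 0.0]] * FPS
motion_b = [[90.0, 10.0]] * FPS
stitched = stitch(motion_a, motion_b, gap_s=0.5)
print(len(stitched))  # 75 frames: 30 + 15 transition frames + 30
```

A fixed interpolation like this ignores momentum and foot contacts, which is exactly the gap a learned transition model is meant to close.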

Practical Perspectives for Today and Tomorrow

For Studio Leaders:

Start experimenting with AI animation for prototyping and ideation

Consider it a complement to, not replacement for, your animation team

Watch for rapid improvements in quality and capability

Plan for a future where animators become directors of AI-generated content

For Product Managers:

Use AI tools to test animation concepts quickly

Apply them to background and crowd characters first

Build familiarity with the technology as it matures

Think about how generative animation could differentiate your game

For Investors:

The fundamentals are strong despite early-stage limitations

Data network effects will likely determine winners

Watch for improvements in quality and production adoption

Consider the long-term potential for real-time generation

Looking Forward: Measured Optimism

After our conversation, I'm convinced that AI animation represents a significant opportunity for game development, even as it works through early-stage challenges. The technology isn't ready to replace traditional animation pipelines, but it's already proving valuable for specific use cases.

What excites me most is the democratization potential. When Viren described indie developers creating animated games without ever opening Maya or Blender, it reminded me of how accessible game engines transformed who could make games. AI animation could do the same for character movement.

The key is maintaining realistic expectations while staying open to rapid improvement. As Tarun's experience showed, the technology has limitations today. But the trajectory is clear: each iteration gets better, each model learns more, and each update expands what's possible.

We're witnessing the early days of a technology that will likely transform how games get made. Not through sudden revolution, but through steady progress that gradually expands what small teams can achieve and how large teams can work more efficiently.

The animation future isn't quite here—but it's closer than many realize, and definitely worth watching.

TRANSCRIPT

**Joseph Kim** *00:00:00*

What if you could animate game characters just using a text prompt or a reference video? Today we're talking with Viren Tellis, CEO of Uthana, an AI company that recently raised over $4 million to make that possible. Their mission is to beat the visual Turing test for human motion.

We'll discuss what they do, how it's helpful, and where the technology is headed, especially with the rapid advancements in AI. Joining us are Viren and Tarun, a product manager at Lila who is actively experimenting with AI tools for our own ambitious shooter game. Welcome, Viren and Tarun.

**Viren Tellis** *00:00:47*

Hey, great to be here.

**Tarun R.** *00:00:49*

Thank you.

**Joseph Kim** *00:00:56*

There are many generative AI services for images and videos, but we hear less about animation. Viren, could you tell us what your service is and what it's used for?

**Viren Tellis** *00:01:16*

Uthana is about getting your characters moving quickly. We focus on animating bipedal characters faster than ever before using generative AI. You don't hear about animation as much because it doesn't use LLM or Midjourney-type models. Animation requires its own models, which are harder to build, so there are fewer of them.

The easiest way to think about Uthana is addressing the problem: I have a rigged character that isn't moving, and it's going to take forever to animate. How do I get characters into my game quickly? That's what we help solve.

**Joseph Kim** *00:01:59*

Tarun, from your perspective, having worked on animations for our shooter game for a very long time, how useful could a technology like this be? I've seen some of the models and can see the potential, but what are your thoughts?

**Tarun R.** *00:02:31*

AI animation is broadly two things: text-to-3D animation and video-to-3D animation. I'm noticing a lot of use cases for video-to-3D animation. People record themselves to create a 3D avatar for social media, and game studios are using apps that record from different directions to generate high-quality, in-game animations.

I'm particularly interested in text-to-animation. While I don't see many people using it yet, it has great potential. You don't have to record yourself; you can just type what you want and make edits. If you don't know the exact motion you're looking for, you can use AI inpainting and other tools to achieve the desired look.

It's an interesting field that's coming up, but for current use cases, I see video-to-animation being used the most.

**Joseph Kim** *00:03:35*

Viren, is that what you're seeing as well? How are your customers using Uthana today, and what are its primary applications?

**Viren Tellis** *00:03:48*

I think about animation creation on Uthana in three ways. The first two methods are text-to-motion and video-to-motion, which Tarun mentioned. The third is our library of over 20,000 motions. This isn't AI; it's simply a searchable library that people use for different reasons.

When you have a specific performance in mind and are willing to wait a few minutes, video-to-motion is a great solution. It takes about two to three minutes to get an animation back on your character.

On the other hand, if you just want to experiment, see what your character looks like in motion, or create a previs, text-to-motion and the library are very valuable. By typing a prompt, you can have your character moving in about five seconds. This lets you quickly assess if the motion is what you, the director, or the producer wants.

**Joseph Kim** *00:05:15*

It sounds like there are two main applications: text-to-motion for prototyping, and video-to-motion or the library for production-ready animations. You can find something similar to what you want in the library of 20,000 motions and then apply it.

For the product managers and executives in the audience, can you characterize the cost savings of using AI for animation? In theory, the potential seems massive, but where is the industry today in practice?

**Viren Tellis** *00:06:21*

The cost savings are potentially huge, especially when you consider how much animation you can create in the same amount of time. But we don't think about it in terms of cost savings; we think about it in terms of acceleration. Acceleration might turn into cost savings, but our focus is on helping developers create their games more quickly. We want to help animators get through their pipeline faster.

The more you do that, the more likely you are to find the fun, prototype, or get your game played by users. I think speed is ultimately the most powerful thing that AI can do for animation. This can result in two outcomes. You might end up reducing overall timelines, which creates a cost saving.

Alternatively, you could keep the timelines the same but add more detail, nuance, and depth to each character. Because the time it takes to build the base set of motion—like a standard locomotion set or a couple of different swings—is so fast, your animator can spend more time on the finesse or the style of your game. It just opens up more opportunity when you have AI at your disposal.

Like many other forms of AI, the question becomes: are we really saving a lot of money, or are we actually just speeding up everything we're doing?

**Joseph Kim** *00:07:44*

Tarun, when you're looking at a service like Uthana, what are you typically looking for? What would cause you to recommend trying an AI service for animation?

**Tarun R.** *00:07:58*

Let me give you an example we tried recently. We had an animation concept of a character drinking from a jug. The idea was nice, but no one had considered how a third person would see the character, or how it would feel if the character were crouching while drinking.

This relates to what Viren was talking about. During the prototyping phase, we just typed in "a person drinking from a jug" in various poses. You could quickly see what it would roughly look like. Once we had that rough idea, we used video-to-motion and confirmed that it worked and we could go in that direction.

The main use case is to validate an idea quickly, and that's exactly what we did.

**Viren Tellis** *00:08:45*

I want to jump in on that, because what Tarun is saying about visualizing it right away is spot on. The secret sauce here is that we get our customer's character moving right away.

It's not a standard, default Uthana character that you have to take back into Maya or Blender for retargeting. You upload your character, and you will immediately see every single motion—whether from our library, from text-to-motion, or from video-to-motion—on your character in seconds or minutes.

If you like it, you can download it and then edit it keyframe-by-keyframe in Blender or Maya. The process is accelerated not just because it's AI-powered, but because it's AI-powered on your character.

**Joseph Kim** *00:09:34*

Viren, to your point about uploading your character, could you describe what that means for the audience? Are you uploading a rig? What is the asset?

And could you take one step back and walk us through the typical workflow for animating a character, and then what it looks like in the Uthana flow?

**Viren Tellis** *00:09:58*

Sure. We can start with character creation, which could come from concept art or a 2D or 3D model. Once you have the 3D model built to your specifications, you have to rig it with a skeletal structure. We are working on tools to help with rigging, but it's largely a solved problem in Blender and Maya, even if it takes a little longer.

It's also important to recognize that different games have different rigs. Some might use a Unity skeleton, some an Unreal skeleton, and some a Mixamo skeleton. Each of those rigs has a different number of bones. For example, a typical Mixamo skeleton might have three spine bones, but an Unreal skeleton might have five.

Historically, the process of getting motion from one rig to another requires a retargeting step where you do a lot of bone mapping. We have eliminated that with Uthana. Once your rig is in our system, you don't have to worry about retargeting it. Every single motion will just be on your rig, and you're ready to go when you download it for keyframe editing.

**Joseph Kim** *00:11:12*

So just so I understand, because that does sound very valuable, are you saying if I have two very different rigs—for a male character and a female character, for example—your technology could take an animation from one and automatically adapt it to the other?

**Viren Tellis** *00:11:36*

That's right, but I want to be careful about the specificity of that example. Let's say I had a male character doing a very male animation.

**Joseph Kim** *00:11:47*

Yeah.

**Viren Tellis** *00:11:47*

If I put that male animation on the female character, that female character is now going to be doing a very male animation. We haven't solved the style transfer problem yet.

**Joseph Kim** *00:11:55*

Right, right.

**Viren Tellis** *00:11:56*

That is coming soon from Uthana. But for now, any animation you have for a set of characters can be applied to any of your other characters as well, simply by uploading the next one. And that's a great way to see what works for some characters but may not work for others, all within the tool.

**Joseph Kim** *00:12:14*

Going back to the Uthana flow, what exactly are you uploading and downloading?

**Viren Tellis** *00:12:23*

You upload a rigged character.

**Joseph Kim** *00:12:25*

A rigged character.

**Viren Tellis** *00:12:26*

Once you do that, the character could be meshed the way you want it in your game, or it could just be a very plain mesh. It doesn't really matter. Whatever you upload is what you're going to visualize and see. And when you download, you're going to get that same character back, however it was rigged and meshed.

**Joseph Kim** *00:12:47*

Tarun, what do you think? You've actually tried it out, right? Do you have any questions about this process?

**Tarun R.** *00:12:54*

I do have one question about retargeting. Right now, it's a very manual process. For a stylized female animation for our game, for example, you have to go into MotionBuilder, map every bone, and then retarget.

AI retargeting is something I haven't really seen before. Is it possible to use your platform to upload an FBX rig along with an existing animation and have the AI simply retarget that animation to a different rig?

In that scenario, I’m providing the animation, and the AI’s only job is retargeting. This would allow us to retain the original animator's style.

**Viren Tellis** *00:13:45*

Yes, definitely. That is a service we provide to our paying customers.

What’s cool about this is that you can upload all your animations and think of Uthana as a central repository where they all exist. As you upload new characters, you'll see those animations on them as well. You can build a library over time.

**Joseph Kim** *00:14:17*

Let's dive into cost. Could you characterize the cost savings of using Uthana? Can you boil it down to specific numbers for a typical studio, both today and what you project for the future?

**Viren Tellis** *00:14:50*

The best way to answer that is with a few examples using time instead of dollars. In the prototyping phase, getting a character moving might take several hours or even a day. You might be buying asset packs, downloading from Mixamo, or digging through old projects.

With Uthana, you can do it much faster by typing prompts or searching the library. You can find something that might work, hit the share link, and send it to your colleagues. They can see it in a 3D player immediately without needing to open Blender or Maya. Uthana functions as a shareable canvas. On that dimension, you're looking at a 50% to 80% increase in speed.

Here’s another example from an AA game studio we worked with on style transfer. This means creating new animations that match the specific style of their existing characters. We asked their animator how long it would take to create a single four-second locomotion motion with a 135-degree turn by hand. He said it would be about two days of work.

We gave him a style transfer output from our system. It wasn't perfect, but it got him most of the way there. He said he could take that output and clean it up in about an hour. That's one-eighth to one-tenth of the original time. That's the kind of acceleration AI can deliver in the animation context.

**Joseph Kim** *00:16:57*

So, you believe a typical customer could see a 50% to 80% time savings. Is that what's possible today, or is that the ultimate endpoint you're working towards?

**Viren Tellis** *00:17:19*

It's what's possible today, but on particular dimensions like prototyping or style transfer. If you're creating a highly stylized or performance-based animation from start to finish, our video-to-motion feature might cut out the first seven hours of work, but you could still have another 30 hours to go.

**Joseph Kim** *00:17:40*

So, you're addressing specific parts of the animation workflow, not the entire process. The savings are significant for the parts you address, but the overall savings on the full workflow would be less.

**Viren Tellis** *00:17:55*

There's another dimension where 'savings' isn't the best word; it's more about 'empowerment.' We have indie studio customers with no animators or even artists on staff. They can use Uthana to get their characters moving without ever opening Maya or Blender.

For them, it's amazing. They can start building out their game, and even if it’s not perfect, things are moving and working. Then, they can send a more complete project to a professional animator or artist. In that sense, they didn't save time or money—they were empowered to do something that was previously impossible for them.

**Joseph Kim** *00:18:35*

Right, it makes the impossible possible. From that perspective, what kinds of customers are you seeing the most traction with today? Is it the indie developers who were previously unable to do this, or is it larger studios looking to save time and costs?

**Viren Tellis** *00:18:55*

I'd categorize our traction into two buckets. The first is the indie side. We have many indie users coming in every day to create motion on their own characters or on our default characters.

The second bucket is our AA game studios. We partner with them more deeply, providing a more bespoke service. They're leaning in for a variety of reasons, like prototyping, style transfer, or motion stitching, which is a big new feature for us.

We're excited about these partnerships because AA studios want to create AAA-quality games on an AA budget. AI, and not just Uthana, is an empowering tool that can help them achieve that. That's why they're excited about what we're doing on the animation side.

**Joseph Kim** *00:20:04*

Let's get into the nuts and bolts of practical usage and adoption challenges. Tarun, why aren't we massively using Uthana? What would it take? What's the gap between what you're seeing now and the point where you would say, "We have to use this"?

**Viren Tellis** *00:20:28*

Okay.

**Tarun R.** *00:20:28*

From a pre-production standpoint, all AI tools are useful. The reason we're not using Uthana is because we are so far into our animations; we already have our V1 animations done. Now, it's about that final stylization tweak.

For example, we might have a female run cycle, but something just doesn't feel right. Maybe she feels like she's running a little too slow, her shoulder is leaning in, or when she crouches, she's too hunched over.

Animators prefer to handle those small tweaks manually. That's where we are—taking a very manual stylization approach.

**Joseph Kim** *00:21:12*

Viren, how do you help us with that? Could I just take a video of Tarun crouching and use that?

**Viren Tellis** *00:21:20*

There are a couple of things you can do currently, and I'll also talk about what we're doing soon. One option is to look for new locomotion moves that don't have a weird hunch. Or, you could do video-to-motion. Both of these will likely result in something where the animator still feels they need to make a tweak or an adjustment.

In the future, we're releasing a model that will produce locomotion in near real-time. You could drive the character around, see what you like, and then export particular locomotion clips in different directions or turning angles. I think that will become really valuable. As we progress that model, we'll be able to take existing animations as input, like the ones Tarun is talking about where you have some of the motion but not all of it. That's coming soon.

Frankly, this is the hard part of any AI tool. It's the equivalent of when I ask AI to write a first draft of a newsletter for me. I get the first draft, but then I'm going to spend three hours tweaking the voice and making sure it looks good before I send it out.

It's the same for animation. That first initial pass is great, but I'm still going to do the tweak because I want to make it perfect in my game, and it's going to be played over and over again. For us, it's about how we eventually get there to help with that finessing task. Right now, we're still focused on the initial task of just getting started.

**Joseph Kim** *00:23:06*

I forgot to ask an important question about costs and economics. What is your pricing model? How do you charge your customers?

**Viren Tellis** *00:23:17*

Our pricing is intentionally ambiguous on the website right now as we figure out the right approach with our partners. However, we are striving for a core tenet: unlimited creation. You shouldn't be stopped from creating, prototyping, and visualizing with Uthana. You'll see that in our existing free tier, where you can prompt as many times as you'd like.

Rather, we want to eventually price on outcomes—on seconds downloaded, essentially. The idea that you get charged just because you got something back that you didn't like doesn't seem right, especially in animation.

So, we are moving to a model of unlimited creation and paying on outcomes. For now, anyone can come to Uthana for free, create as much as they want, see it on their character, and download up to 20 seconds per month. If they're interested in more than that, they can just get in contact with me and we'll figure out the right plan for them.

**Joseph Kim** *00:24:27*

You mentioned motion stitching, but before we go into that, Tarun, do you have any other questions about the service or any other items that we may not have addressed?

**Tarun R.** *00:24:42*

I have a question about prompting. I noticed that prompting for animation took me a lot of time because I had a lot of friction trying to visualize and describe what I wanted.

Image prompting was also hard at first but became much easier because the models themselves are so good that even a bad prompt will give you something pretty. That gives me enough motivation to go prompt again and find something good.

With animation, I was able to get simple things like a person walking, a person doing a moonwalk, or a person walking in circles. But if I wanted something very specific, like an action sequence of a guy holding a gun and coming into a room, it required a lot of detail. He had to be in a crouch, look left, look right, and hold his weapon out. You have to be very specific.

I had a lot of friction getting into that level of specificity because I couldn't imagine it at first. When I compare text-to-motion versus video-to-motion, I would much rather just record myself. The initial friction with text-to-motion is harder. What are some of the ways you're making this easier for the user?

**Viren Tellis** *00:26:11*

You're right that it is easier to get very specific, performance-driven action out of video-to-motion. Text-to-motion is more limited in that sense, and I think it's going to be for some time, not just for our system, but for anybody's. This is partly because of how the AI is created. For image and 2D video, we are training on unbelievable amounts of data—essentially every image on the internet is potential training data.

In the context of animation, at least in the Uthana system, we think about it as training on motion capture data. The amount of available, labeled motion capture data is nowhere near what exists in the 2D video and image space. For that reason, we're going to see an evolution where simple prompts become very good first, then medium prompts will get better, and finally, longer prompts will improve. It's going to evolve over time. I encourage anyone that tried Uthana six months ago to try it again today. If you're still not happy, try it again in another six months, because it's going to continue to evolve.

But there was a second part to your question: if I'm trying to do multiple things at once or in sequential order, is text the best way to do it? At some point, it gets harder to use a single prompt, especially when trying to keep a motion cohesive. It’s better to take the component parts and stitch them together. That’s why we released a product called Motion Stitching.

With Motion Stitching, you can take smaller animations—perhaps from a text prompt or a specific video-to-motion capture—and combine them. For example, a character coming into a room, doing a gun check, putting the gun away, and then walking forward could be four different motions. Our solution lets you find motion A, find motion B, decide on the timing between them—anywhere from 0.1 to 3 seconds—and hit stitch. Ten seconds later, you have a coherent motion. It's really magical and fun because you can start to string things together and create a whole scene within Uthana, borrowing from both text- and video-to-motion.
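The stitching step can be pictured with a deliberately naive sketch. It makes two assumptions: each clip is a list of frames, and each frame is a flat list of joint channel values. It fills the gap with a linear crossfade whose length is clamped to the 0.1 to 3 second range Viren mentions. The interview does not describe how Uthana generates transitions, and this sketch is not that method. `stitch` and `lerp_frame` are hypothetical names. A production system would interpolate rotations with quaternion slerp or generate the transition with a model rather than blending channels linearly.

```python
from typing import List

Frame = List[float]  # hypothetical flat pose: one value per joint channel


def lerp_frame(a: Frame, b: Frame, t: float) -> Frame:
    """Linearly interpolate two poses channel by channel, with t in [0, 1]."""
    return [x + (y - x) * t for x, y in zip(a, b)]


def stitch(clip_a: List[Frame], clip_b: List[Frame],
           transition_seconds: float, fps: int = 30) -> List[Frame]:
    """Join two clips with a linear transition from A's last pose to B's first pose."""
    if not clip_a or not clip_b:
        raise ValueError("both clips need at least one frame")
    # Clamp to the 0.1-3 s range described in the interview.
    transition_seconds = min(max(transition_seconds, 0.1), 3.0)
    n = max(1, round(transition_seconds * fps))  # number of in-between frames
    start, end = clip_a[-1], clip_b[0]
    # i / (n + 1) skips t=0 and t=1, which already exist as A's last and B's first frames.
    transition = [lerp_frame(start, end, i / (n + 1)) for i in range(1, n + 1)]
    return clip_a + transition + clip_b
```

For example, a 0.1 second transition at 30 fps inserts three blended frames between the two clips.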

**Tarun R.** *00:28:29*

You mentioned there isn't a lot of motion capture data available. Given that we now have video-to-motion, do you think that technology could be used to create the large library of motion capture data that you need?

**Viren Tellis** *00:28:53*

That's a great question. Video-to-motion will be informative to the AI process. However, it's important to recognize that good quality input results in good quality output, and bad quality input results in bad quality output.

A video capture from a single camera on an iPhone creates a very different input for a model than a 100-camera Vicon system in a studio. We're being very thoughtful about what inputs to use and how to use them. We also have to make sure that whatever we use has the appropriate commercial rights, so that the output is both high-quality and can be used commercially within our customers' products.

**Tarun R.** *00:29:41*

Are there any open-source text-to-motion or video-to-motion models available?

**Viren Tellis** *00:29:49*

Both exist, but I haven't seen any that are easily available for the average user to just log into and use. When I say I've seen them, I mean on research websites or GitHub, where you would have to run the code yourself to do text- or video-to-motion. For the average person, a solution like Uthana is a great place to start because you can use it for free and experience how it might work for your flow.

If you're a studio thinking about building your own models internally, there are definitely starting points out there. But it has been a two-year journey for us to get to this point, so I would caution folks against getting into this space. Just as I wouldn't tell anybody to go train their own LLM, I would advise them to use what's available. I think the same applies in the animation context.

**Tarun R.** *00:30:53*

If you're okay getting into the details, can you walk us through how you train your LLM and share any specifics?

**Viren Tellis** *00:31:05*

First, it's important to clarify that our model is not an LLM, or large language model, which is trained on language. However, the easiest way to think about Uthana is *like* an LLM. In its simplest form, where an LLM predicts the next word in a sentence, Uthana predicts the next frame of motion. And where LLMs train on language, Uthana trains on human motion data.
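Here is a minimal sketch of autoregressive next-frame generation that makes the LLM analogy concrete. It assumes frames are flat lists of floats. The `constant_velocity` function is a hypothetical stand-in for a trained network. The point is the loop: each predicted frame is fed back in as context, just as an LLM appends each predicted token before predicting the next.

```python
from typing import Callable, List

Frame = List[float]
Predictor = Callable[[List[Frame]], Frame]


def constant_velocity(context: List[Frame]) -> Frame:
    """Hypothetical stand-in for a learned model: repeat the last frame-to-frame change."""
    if len(context) < 2:
        return list(context[-1])
    prev, last = context[-2], context[-1]
    return [cur + (cur - p) for p, cur in zip(prev, last)]


def rollout(seed: List[Frame], predict_next: Predictor,
            num_frames: int, window: int = 8) -> List[Frame]:
    """Autoregressive generation: each predicted frame is appended and fed back as context."""
    frames = list(seed)
    for _ in range(num_frames):
        context = frames[-window:]  # the predictor only sees the most recent frames
        frames.append(predict_next(context))
    return frames
```

Swapping `constant_velocity` for a trained model changes the quality of the motion but not the shape of the loop.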

We have multiple models and have used different architectures, including vector-quantized VAEs, transformers, and diffusion models. Depending on the use case, we will go with different models, but at their core, they are all learning from human motion capture data and learning how to predict the next frame of movement.
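Of the architectures listed, the vector-quantized VAE most directly turns motion into something token-like. The sketch below shows only the quantization step, as it is generally described for VQ-VAEs. Each continuous latent vector is snapped to its nearest codebook entry, which yields a discrete index that a transformer could then predict. The encoder and decoder are omitted and the codebook values are made up, so nothing here reflects Uthana's actual models.

```python
import math
from typing import List, Sequence

Vector = Sequence[float]


def nearest_code(z: Vector, codebook: List[Vector]) -> int:
    """Index of the codebook entry closest to z by squared Euclidean distance."""
    best_index, best_dist = 0, math.inf
    for i, code in enumerate(codebook):
        dist = sum((a - b) ** 2 for a, b in zip(z, code))
        if dist < best_dist:
            best_index, best_dist = i, dist
    return best_index


def quantize(latents: List[Vector], codebook: List[Vector]) -> List[int]:
    """Turn a sequence of continuous latents into discrete motion tokens."""
    return [nearest_code(z, codebook) for z in latents]


def dequantize(tokens: List[int], codebook: List[Vector]) -> List[Vector]:
    """Look tokens back up; a decoder (omitted here) would map these vectors to poses."""
    return [codebook[t] for t in tokens]
```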

Then we think about conditioning. We can condition the model on a video, on text, or on a style of animation, and these conditions allow us to get different outputs.

For text conditioning, there is a language component. We use a small language model, not a large one, to learn the relationship between motion and language. When a user enters a prompt, one model is used to understand the relationship between the language and the motion, and a different model is used to predict what that motion should be. That is how we generate the motion output for the user.
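The two-model split Viren describes for text conditioning can be sketched as a pipeline. The prompt is encoded once, and every motion step is conditioned on that embedding. Both models here are hypothetical placeholders. `toy_text_encoder` uses hashed word counts instead of a small language model. `predict_next` is any callable that takes recent frames plus the text embedding.

```python
import hashlib
from typing import Callable, List

Frame = List[float]
Embedding = List[float]


def toy_text_encoder(prompt: str, dim: int = 8) -> Embedding:
    """Hypothetical stand-in for a small language model: a normalized hashed bag of words."""
    vec = [0.0] * dim
    for word in prompt.lower().split():
        bucket = int(hashlib.md5(word.encode()).hexdigest(), 16) % dim
        vec[bucket] += 1.0
    norm = sum(v * v for v in vec) ** 0.5 or 1.0  # avoid dividing by zero for empty prompts
    return [v / norm for v in vec]


def generate(prompt: str, seed: List[Frame], num_frames: int,
             encode: Callable[[str], Embedding],
             predict_next: Callable[[List[Frame], Embedding], Frame]) -> List[Frame]:
    """Two-model pipeline: encode the text once, then condition every motion step on it."""
    cond = encode(prompt)  # model 1: language -> embedding
    frames = list(seed)
    for _ in range(num_frames):
        # model 2: recent motion (last 8 frames) + text embedding -> next frame
        frames.append(predict_next(frames[-8:], cond))
    return frames
```

Keeping the encoder separate means the same motion predictor could, in principle, be conditioned on other inputs such as video or style, which matches the conditioning idea described above.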

**Tarun R.** *00:32:40*

To relate this to image generation, you have models like Stable Diffusion and then community-driven LoRAs. Someone can take the standard model, train their own custom LoRA, and generate images in a specific style.

Do you think something similar could happen with animation? For example, could a user have a generic walk cycle, train a LoRA for walking in a certain style, and then apply that to the base animation weights?

**Viren Tellis** *00:33:14*

Absolutely. We've already seen it happen within Uthana. The nuance, however, is that we don't allow this to be done open-source yet. Instead, we partner with studios that have large sets of motion data. We bring that data in and train a custom model for them, so their data isn't mixed into the generic models we serve to other customers. Then we perform fine-tuning or a LoRA adaptation to get a stylized version of the character. That's a partnership we can offer today.

Is there a future where we make that more self-serve or open-source? Potentially. Once we feel comfortable that the outputs will be consistently successful, it becomes an awesome feature. But we've learned that things can break easily because of the different rigs customers have, the different orientations in their games, and the way they have set up their animation systems. These things all affect the input data, and if it doesn't match our data setup, things fail.

This is why we engage in more custom partnerships with studios to solve that problem. But style transfer—learning the style of an animator and then getting more content in that style—is absolutely something we'll see in the future. It's going to accelerate existing animators who have a concept of art and style they want to bring to the table but need a tool to speed up their process.
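For readers coming from image generation, here is the LoRA idea Tarun and Viren discuss in its standard form. A frozen weight matrix W is augmented with a trainable low-rank product B·A, scaled by alpha / r. The sketch uses plain Python lists and made-up shapes. The interview does not describe which layers Uthana adapts, or how.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def lora_forward(W: Matrix, A: Matrix, B: Matrix, x: Vector, alpha: float) -> Vector:
    """y = W x + (alpha / r) * B (A x); W stays frozen, only A (r x d_in) and B (d_out x r) train."""
    r = len(A)                        # rank of the adapter
    base = matvec(W, x)               # frozen pretrained weights (e.g. a generic motion model)
    delta = matvec(B, matvec(A, x))   # low-rank, style-specific correction
    scale = alpha / r
    return [b + scale * d for b, d in zip(base, delta)]


def lora_param_count(d_in: int, d_out: int, r: int) -> int:
    """Trainable parameters in the adapter, versus d_in * d_out for full fine-tuning."""
    return r * (d_in + d_out)
```

For a 512 x 512 layer at rank 8, the adapter trains 8,192 parameters instead of 262,144. That small footprint is why a per-studio style adapter can be cheap to train and store. Because B is usually initialized to zeros, the adapted model starts out identical to the base model.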

**Tarun R.** *00:34:52*

We should definitely try that.

**Joseph Kim** *00:34:57*

Okay.

**Viren Tellis** *00:34:58*

I'll add one other thing to think about for the future. Uthana’s vision is to bring characters to life in real time in response to the environment, the narration, and the gameplay—all of which are becoming AI-generated. We're seeing game development become both immersive and generative, and human motion needs to be responsive and generative as well.

This means that while what we're doing today is fast, it's still in a somewhat offline context. The future is real-time, in-game motion, and it's coming sooner than we all expect. I'm excited for when Uthana can share what a real-time motion model looks like.

When that comes, it's going to change how we think about animation. The game itself will bring the character to life, and the roles of the game developer and animator will be different. Their job will be about weighting the model or giving it inputs to get the right outputs, not thinking keyframe by keyframe.

Instead, they will ask: Is that the style I want? Is that the direction this person should be running? Is that the essence of my character? It's going to be a fascinating new way to think about animation, and we'll have to develop it by partnering with those who want it.

**Joseph Kim** *00:36:33*

Since we're on the topic of the future, let's dive into that. AI technology will certainly improve existing workflows, but how do you see Uthana expanding its product offerings as AI gets better?

For example, you and I have talked about videos for *Clair Obscur: Expedition 33*, where they attached iPhones to actors' faces for facial animations. Could you talk about future capabilities, whether it's facial animation or something else, that will be enabled by more capable technology?

**Viren Tellis** *00:37:35*

Facial animation is definitely one area that will continue to evolve. We already see real-time syncs between an iPhone and Unreal with MetaHuman and other technologies, and that will only get better. What's also interesting is when an AI agent or an NPC can have the same realism and likeness as player characters. When I interact with an NPC at a shop or on a quest, their movement and facial responses should feel as if they were another player. That is the future we're headed toward.

Uthana is focused on making that future possible from a body perspective, but we might eventually move into facial animation as well. Other companies focused on faces are working on how to get them reacting quickly.

This will all be tied together: language input from a large language model, AI input from the game system about where a character is moving, and the motion from Uthana. I do think we'll have AI agents in the digital sense, whether for gameplay, training simulations, medical purposes, or something else. It's going to be a fascinating, fun time to bring these agents to life in a 3D world.

**Joseph Kim** *00:39:03*

You talked about agents, and a key advantage of agentic systems is scale—the ability to have not just a few agents, but a billion. In that scenario, it seems the limitation from an AI perspective would be human judgment. You could create a billion animations, but how do you know what's good?

Will an AI be able to understand what "good" is by studying the internet or a specific game? Tarun mentioned that a good animation might involve subtle things, like a character with a gun crouching down and looking left and right. Can an AI learn that kind of nuance?

It seems that the ability for an AI to understand quality might be the key limitation if everything else scales. Could you talk about that, as well as other limitations you see blocking additional cost savings, capabilities, or speed?

**Viren Tellis** *00:40:27*

We should separate the AI agent as a creation tool versus the AI agent as the goal.

In terms of AI as a creator, the limitation is definitely people. Success will be based on taste. Does the human creator have good taste? And do the tools at their disposal allow them to bring that taste into the product or character? That is a limiting factor, and the goal of companies like ours is to empower folks that have taste to do more and create more content than they could today.

Today, creating three hero characters and four NPCs might be a year's worth of work. What if I want 10 hero characters and 100 NPCs? In the long run, AI should be able to help with that problem.

Separately, when we think about the AI agent as the outcome, the NPC running around in the world, the scaling problem is about how much one person can create. I can now create 100 characters instead of three, so I've scaled output by roughly 30x. But could I create a million? Probably not, because I would need a lot more people. I think we'll see a shift where animators and artists become tastemakers with a different type of workflow that lets them create a lot more content.

The other competing factor here is compute. If I want to get compute done at scale, it's probably not a problem for what we're building. But if I want to do it in real time, where the character is coming to life on the device, what does that mean for the GPU on the device or in the cloud? What are we streaming? Those are the interesting technology questions we're going to be coming up on. It's too early to say whether there's a blocker there, but those are the things I think about as I look into the future.

**Joseph Kim** *00:42:35*

Let's shift from the AI to the human beings behind it. You've been on quite a journey starting this company. Congratulations on recently raising over $4 million and gaining traction.

Could you take us to the beginning of your journey starting Uthana? What was the origin of the company, and how did you get the initial funding?

**Tarun R.** *00:43:11*

Sure.

**Viren Tellis** *00:43:12*

About two and a half years ago, I was rolling off my last startup. It was in the philanthropy space and still does good in the world, but it wasn't going to be the venture-backed business I had initially envisioned. I went to my brother's house to think about what to build next. We came across all sorts of ideas but eventually came back to his area of expertise: video games. He was a video game programmer for the Call of Duty franchise.

As we talked, we realized my experience on the product side could marry his on the technology and engineering side. To give some background, I spent seven years in the ad tech industry at a company called AppNexus, the last three of which were on the product side. I was shipping real-time, algorithmic infrastructure products to grow the gross transaction volume on the platform.

I looked at ad tech and animation and, surprisingly, found a lot of similarities. In both, you have something creative on the screen (an ad or a character's motion), but the technology required to get it there is unbelievably deep. I was also used to working in real-time systems. Motion matching, where you're sampling every quarter-second to find the best frame, is very similar to internet advertising, where every time you refresh a page, you have to run an auction and get a new ad up within a quarter-second. We got excited about these analogies.
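Motion matching, as it is commonly described in game animation, can be sketched as a nearest-neighbor search. The system builds a feature vector from the character's current pose and desired trajectory, then jumps to the database frame whose features are closest. The feature layout, weights, and clip names below are hypothetical. Real systems typically use acceleration structures rather than the brute-force `min` shown here. The quarter-second search interval is the figure from the interview.

```python
from dataclasses import dataclass
from typing import List, Sequence


@dataclass
class Candidate:
    clip: str              # hypothetical clip name in the animation database
    frame: int             # frame index within that clip
    features: List[float]  # e.g. foot positions, hip velocity, future trajectory points


def best_match(query: Sequence[float], database: List[Candidate],
               weights: Sequence[float]) -> Candidate:
    """Return the database frame whose features are closest to the query (weighted squared distance)."""
    def cost(c: Candidate) -> float:
        return sum(w * (q - f) ** 2 for w, q, f in zip(weights, query, c.features))
    return min(database, key=cost)


def search_frames(total_frames: int, fps: int = 60, interval_s: float = 0.25) -> List[int]:
    """Frames on which a new search runs, e.g. every quarter-second."""
    step = max(1, round(fps * interval_s))
    return list(range(0, total_frames, step))
```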

Then, in the fall of 2022, ChatGPT came out commercially. We knew generative AI was going to change every industry and asked, "How does it change this one?" That's where we saw the vision that characters should just come to life in real time. We decided to go make that a reality. It's daunting to think we had that foresight then, because there has been a lot of blood, sweat, and tears to get to where we are today.

We started with a three-pronged approach. First, we interviewed over 100 animators in the early days to confirm there was a customer pain point. It was very clear there was an opportunity. Second, we needed to ensure the AI research was going to be productive, which we found to be the case as academics were pushing the space forward. Third, we had to get funding.

In the spring of 2023, we got into Andreessen Horowitz's Speedrun accelerator in their first batch, and that really kicked things off for us. We were able to coalesce around a pre-seed round, raise capital, hire engineers, and start the building process towards what Uthana is today.

**Joseph Kim** *00:46:01*

You mentioned "blood, sweat, and tears." Could you walk us through some of the hard parts of building this business?

**Viren Tellis** *00:46:08*

In the early days, we had an idea of how 3D animation works, but we didn't realize how painful it would be to get characters of any rig to move. There are so many nuances and specifics for each rig, and making them all move is a very hard problem that we spent months on—perhaps even too long.

Another challenge was that there are many different approaches to getting a model working. I mentioned three or four different architectures earlier, and we didn't know which one was right. To some degree, we still don't; the answer could be different for different use cases. So you try different things, see failures, put in months of work, and then show it to a customer who says, "That's horrible." You just have to go back to the drawing board.

The artistic and stylistic expectations of our industry are very high, and we continue to struggle with that today. Folks will come in and say, "Oh, I can't use this." And that's fair. We're going to continue to get better. But there are people using it and a lot of people enjoying it. For us, it's about marrying what we know we can build today and in the next three months with the customers that are excited about leaning into AI to accelerate their pipelines.

**Joseph Kim** *00:47:41*

Continuing on the topic of lessons learned, what have been the biggest lessons from your entrepreneurial journey over the last few years? What advice would you give to other entrepreneurs trying to start a company?

**Viren Tellis** *00:47:59*

Zooming out, there are a couple of big lessons. First, having a vision early on is very important. It serves as a North Star for you individually, so you don't get lost in all the turns and switchbacks of a startup.

It also serves as a guiding light for attracting the talent most passionate about what you're building. I have to give props to the Uthana team for what we've built. I'm not an engineer; all the technology is being built by others on the team, and they are amazing. They're excited about our vision and our mission to beat a visual Turing test for human motion.

Another big lesson is to not listen to the masses when it comes to fundraising advice, but to think about your individual situation. Sometimes the first thing you need to do is fundraise, and other times it's to build. Most VCs will tell you to build first and fundraise later, but I would caution that there are times when you should just fundraise first.

If investors don't believe in you based on the team, the deck, the vision, the mission, and customer interviews, having a bad demo won't help. Stick to those first five things. If they don't believe in you, maybe change one of those.

This was a lesson I learned between my two startups. With the first startup, we built a working product but couldn't get funded. With the second, I built nothing, and it still got funded. You have to ask yourself why. Part of it is that AI and games are hot, and philanthropy, sadly, is not. But it's also a function of crafting the story and the vision the right way to get that funding.

I'll share those two lessons for now, but I'm happy to go into more specifics.

**Joseph Kim** *00:50:05*

Tarun, do you have any final questions for Viren?

**Tarun R.** *00:50:09*

Yes, a couple. With AI motion, I'm seeing a lot of work being done in 3D, but not much in 2D. 2D games have 2D rigs and animations, but there isn't much AI development in that space.

What's your take on that? Why aren't people doing it? Is it not a profitable market to go after?

**Viren Tellis** *00:50:35*

That's a great question. I have some guesses, though I'm not an expert in this specific area. We have seen that 2D talking heads are becoming very exciting and popular right now. You might ask why we don't see the full body, and I think it's because having temporal control over the full body is a harder problem. It's partly a data problem, and I hypothesize that it needs to be solved by leveraging the benefits of 3D.

If you want the best body motion in 2D, you should probably create it in 3D and then just play it back in 2D. The ability to learn human body motion control purely from 2D videos is limited. Someone will eventually crack this problem, and my guess is they will use 3D to do it. This isn't something we're focused on because we're much more excited about the real-time, immersive experience of a game.
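As a rough illustration of "create it in 3D and play it back in 2D," the sketch below projects camera-space 3D joint positions onto a 2D image using a simple pinhole camera model. The focal length, image center, and joint names are assumptions. A real pipeline might instead render full sprites, or use the projected keypoints to drive a 2D rig.

```python
from typing import Dict, Tuple

Point3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def project_pose(joints: Dict[str, Point3], focal: float,
                 cx: float, cy: float) -> Dict[str, Point2]:
    """Pinhole projection of camera-space joints (z > 0 is in front of the camera) to pixels."""
    out = {}
    for name, (x, y, z) in joints.items():
        if z <= 0:
            raise ValueError(f"joint {name!r} is behind the camera")
        # Screen y grows downward, so flip the sign of camera-space y.
        out[name] = (cx + focal * x / z, cy - focal * y / z)
    return out
```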

In an offline context, where you can spend several minutes creating the content, I think the best way is with 3D. But that leads back to the data problem. These are just my hypotheses; I'm not sure who is the best at 2D animation right now.

However, I have heard of several well-funded startups doing anime specifically. Since anime is ultimately 2D and involves the full body, they are likely experiencing and hopefully solving some of these problems so that more content of this type can be created.

**Tarun R.** *00:52:28*

Okay, this next question is slightly more technical. You were talking about real-time animation. Recently, I've been playing a lot with Blender MCP; MCP stands for Model Context Protocol.

For those who don't know, if you have an LLM or any AI model that needs to talk to software like Blender or Maya, the MCP acts as a translation layer in between. It holds the functions for how to communicate with that specific tool. For example, a trading platform in India called Zerodha launched an MCP that lets you talk directly to your financial account and have it act as an advisor.
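MCP is built on JSON-RPC 2.0. Tools are invoked with a `tools/call` request that names the tool and supplies its arguments. The stdlib-only sketch below mimics that dispatch idea so the "translation layer" is easier to picture. It is not a spec-compliant MCP server. The `set_keyframe` tool and `SCENE` dictionary are hypothetical stand-ins for calls into Blender or Maya. A real server would use an MCP SDK and the host application's own scripting API.

```python
import json
from typing import Any, Callable, Dict

TOOLS: Dict[str, Callable[..., Any]] = {}
SCENE: Dict[str, Dict[int, float]] = {}  # hypothetical stand-in for the host app's scene data


def tool(fn: Callable[..., Any]) -> Callable[..., Any]:
    """Register a function so a model can call it by name."""
    TOOLS[fn.__name__] = fn
    return fn


@tool
def set_keyframe(bone: str, frame: int, rotation_deg: float) -> str:
    """Hypothetical tool: store one rotation key for a bone."""
    SCENE.setdefault(bone, {})[frame] = rotation_deg
    return f"keyed {bone} at frame {frame}"


def handle(request_json: str) -> str:
    """Answer a JSON-RPC 2.0 style 'tools/call' request by dispatching to a registered tool."""
    req = json.loads(request_json)
    params = req.get("params", {})
    fn = TOOLS.get(params.get("name"))
    if req.get("method") != "tools/call" or fn is None:
        # -32601 is JSON-RPC's "Method not found" error code.
        return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                           "error": {"code": -32601, "message": "unknown method or tool"}})
    result = fn(**params.get("arguments", {}))
    return json.dumps({"jsonrpc": "2.0", "id": req.get("id"),
                       "result": {"content": [{"type": "text", "text": result}]}})
```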

I've been playing with the Blender MCP, connecting it to an LLM and telling it what to do in Blender, which it then executes. This gave me an idea: What if Uthana created an MCP for Maya? An animator could just create three or four key poses in Maya without having to go anywhere else.

The MCP could then, perhaps through an API call, read those poses from Maya and automatically fill in all the other keyframes. What do you think of that idea?
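Linear in-betweening is the simplest possible version of "fill in the other keyframes." The sketch below assumes a pose is a flat list of channel values keyed by frame number. It is only a baseline that shows the shape of the problem. The appeal of an AI model in this role would be natural arcs, weight shifts, and timing that straight-line interpolation cannot produce. Rotation channels would also normally need quaternion interpolation.

```python
from bisect import bisect_right
from typing import Dict, List

Pose = List[float]


def inbetween(keys: Dict[int, Pose]) -> Dict[int, Pose]:
    """Fill every frame between the first and last key by linear interpolation."""
    if not keys:
        return {}
    frames = sorted(keys)
    out: Dict[int, Pose] = {}
    for f in range(frames[0], frames[-1] + 1):
        i = bisect_right(frames, f) - 1  # index of the last key at or before frame f
        if frames[i] == f:
            out[f] = list(keys[f])  # key poses are copied unchanged
            continue
        f0, f1 = frames[i], frames[i + 1]
        t = (f - f0) / (f1 - f0)
        out[f] = [a + (b - a) * t for a, b in zip(keys[f0], keys[f1])]
    return out
```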

**Viren Tellis** *00:54:11*

There are three things to unpack on that idea. First, can Uthana create plugins or APIs for keyframe tools? The answer is yes, and we probably will. We haven't done that yet because, as we've discussed, creating products through a plugin involves even more blood, sweat, and tears. You'd be working through a plugin the whole time, whereas the ability to iterate and adapt in a web-based UI is much faster. But we'll get there.

Second, animations are very bespoke and different. Once we start going into Maya, we have to accept that our customers will be using all different types of rigs, conventions, and sizes. We have to solve that problem, which is not easily solved. We've somewhat solved it in terms of an interface between the user and our product, but extending that to different tools is another challenge.

Finally, I think about the MCP as a way to empower users who are not as technically proficient with a tool. You could have an MCP for Maya with or without Uthana. It sounds great, but who would it be great for? It's going to be great for people who don't know Maya.

My guess is that an expert animator in a AAA studio who knows Maya really well isn't going to get a lot of value out of an MCP. Arguably, if the MCP can do things a lot faster than they could otherwise, there might be value. But so far, we're not near that yet. A Maya animator can adjust an arm to the right place on a temporal basis much more easily by hand than by trying to describe it to an LLM.

**Tarun R.** *00:56:04*

I'm not very technically proficient with Blender, but I tried to download an emote from a website and retarget it to our rig. Since the rigs weren't the same, I had to manually match about 106 or 107 bones.

So I went into Blender and fed it instructions: read all the bones from both rigs and then map them for me. I was able to get it to work to some extent, but not very well. I think that approach works for someone who's not very proficient.
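Much of the 106-bone matching Tarun describes comes down to name normalization. The sketch below strips namespaces such as Mixamo's `mixamorig:` prefix, lowercases names, removes separators, and resolves a small alias table. The aliases and target names are hypothetical. Matching names is only the first step. As Viren notes, differing rigs, conventions, and sizes are what make retargeting genuinely hard.

```python
import re
from typing import Dict, List, Tuple

# Hypothetical alias table: normalized bone name -> canonical name.
ALIASES = {
    "hips": "pelvis",
    "leftupleg": "thigh_l", "thighl": "thigh_l",
    "rightupleg": "thigh_r", "thighr": "thigh_r",
    "leftarm": "upperarm_l", "upperarml": "upperarm_l",
}


def normalize(name: str) -> str:
    """Drop namespaces like 'mixamorig:', then case and non-alphanumeric separators."""
    name = name.split(":")[-1]
    return re.sub(r"[^a-z0-9]", "", name.lower())


def canonical(name: str) -> str:
    n = normalize(name)
    return ALIASES.get(n, n)


def map_bones(source: List[str], target: List[str]) -> Tuple[Dict[str, str], List[str]]:
    """Map source bones to target bones by canonical name; report what could not be matched."""
    by_canonical = {canonical(t): t for t in target}
    mapping: Dict[str, str] = {}
    unmapped: List[str] = []
    for s in source:
        t = by_canonical.get(canonical(s))
        if t is None:
            unmapped.append(s)
        else:
            mapping[s] = t
    return mapping, unmapped
```

The `unmapped` list is the short set of bones a person would still match by hand, instead of all of them.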

**Viren Tellis** *00:56:44*

That's a good place where, if we were partnering, we'd say, "Let's make sure your rig is in Uthana and works well." Then you could just upload the video of that emote, and you're set.

**Joseph Kim** *00:56:53*

Well, I think those are all the questions from us, Viren. Do you have any last message for our audience?

In terms of getting in touch, I'll include links to you and the Uthana website in the show notes. But is there any other way that people can get a hold of you?

**Viren Tellis** *00:57:13*

They can follow me on LinkedIn or Twitter; my handle is vitellus. You can also email me directly at V@Uthana.

I would love to hear from anyone who either has ideas for generative AI for 3D animation or has feedback on a product of ours that they've used. I'm always interested in that feedback, so please reach out and get in touch. We'd be happy to chat.

**Joseph Kim** *00:57:41*

Awesome. All right, thanks so much for your time. Thanks, Viren. Thanks, Tarun. And for our audience, we will catch you next time. Thanks, guys.

**Viren Tellis** *00:57:48*

Thanks a lot.